How to Read Hours From Date From Csv Python

In [ane]: import pandas as pd In [two]: import matplotlib.pyplot equally plt -

Air quality data

For this tutorial, air quality data virtually \(NO_2\) and Particulate thing less than two.5 micrometers is used, made available by openaq and downloaded using the py-openaq bundle. The

air_quality_no2_long.csv"data prepare provides \(NO_2\) values for the measurement stations FR04014, BETR801 and London Westminster in respectively Paris, Antwerp and London.To raw data

In [3]: air_quality = pd . read_csv ( "information/air_quality_no2_long.csv" ) In [four]: air_quality = air_quality . rename ( columns = { "date.utc" : "datetime" }) In [five]: air_quality . head () Out[v]: city state datetime location parameter value unit of measurement 0 Paris FR 2019-06-21 00:00:00+00:00 FR04014 no2 20.0 µg/m³ 1 Paris FR 2019-06-xx 23:00:00+00:00 FR04014 no2 21.eight µg/g³ 2 Paris FR 2019-06-20 22:00:00+00:00 FR04014 no2 26.5 µg/g³ 3 Paris FR 2019-06-twenty 21:00:00+00:00 FR04014 no2 24.9 µg/m³ 4 Paris FR 2019-06-20 20:00:00+00:00 FR04014 no2 21.4 µg/thou³

In [half-dozen]: air_quality . urban center . unique () Out[6]: array(['Paris', 'Antwerpen', 'London'], dtype=object)

How to handle time series data with ease?¶

Using pandas datetime properties¶

-

I desire to work with the dates in the column

datetimeas datetime objects instead of plain textIn [7]: air_quality [ "datetime" ] = pd . to_datetime ( air_quality [ "datetime" ]) In [8]: air_quality [ "datetime" ] Out[viii]: 0 2019-06-21 00:00:00+00:00 one 2019-06-20 23:00:00+00:00 two 2019-06-20 22:00:00+00:00 3 2019-06-20 21:00:00+00:00 four 2019-06-20 twenty:00:00+00:00 ... 2063 2019-05-07 06:00:00+00:00 2064 2019-05-07 04:00:00+00:00 2065 2019-05-07 03:00:00+00:00 2066 2019-05-07 02:00:00+00:00 2067 2019-05-07 01:00:00+00:00 Name: datetime, Length: 2068, dtype: datetime64[ns, UTC]

Initially, the values in

datetimeare character strings and exercise non provide whatsoever datetime operations (e.one thousand. extract the year, 24-hour interval of the week,…). Past applying theto_datetimefunction, pandas interprets the strings and convert these to datetime (i.e.datetime64[ns, UTC]) objects. In pandas we call these datetime objects similar todatetime.datetimefrom the standard library equallypandas.Timestamp.

Note

As many information sets do contain datetime information in one of the columns, pandas input office similar pandas.read_csv() and pandas.read_json() can practice the transformation to dates when reading the information using the parse_dates parameter with a listing of the columns to read equally Timestamp:

pd . read_csv ( "../information/air_quality_no2_long.csv" , parse_dates = [ "datetime" ]) Why are these pandas.Timestamp objects useful? Let's illustrate the added value with some example cases.

What is the outset and end date of the time series information set we are working with?

In [nine]: air_quality [ "datetime" ] . min (), air_quality [ "datetime" ] . max () Out[nine]: (Timestamp('2019-05-07 01:00:00+0000', tz='UTC'), Timestamp('2019-06-21 00:00:00+0000', tz='UTC')) Using pandas.Timestamp for datetimes enables us to calculate with appointment data and make them comparable. Hence, nosotros can use this to get the length of our time serial:

In [x]: air_quality [ "datetime" ] . max () - air_quality [ "datetime" ] . min () Out[10]: Timedelta('44 days 23:00:00') The result is a pandas.Timedelta object, similar to datetime.timedelta from the standard Python library and defining a time duration.

To user guide

The various time concepts supported by pandas are explained in the user guide section on fourth dimension related concepts.

-

I want to add together a new column to the

DataFramecontaining only the month of the measurementIn [11]: air_quality [ "calendar month" ] = air_quality [ "datetime" ] . dt . month In [12]: air_quality . head () Out[12]: city country datetime location parameter value unit month 0 Paris FR 2019-06-21 00:00:00+00:00 FR04014 no2 20.0 µg/m³ 6 one Paris FR 2019-06-20 23:00:00+00:00 FR04014 no2 21.viii µg/m³ 6 2 Paris FR 2019-06-xx 22:00:00+00:00 FR04014 no2 26.5 µg/m³ 6 iii Paris FR 2019-06-twenty 21:00:00+00:00 FR04014 no2 24.9 µg/k³ 6 four Paris FR 2019-06-xx 20:00:00+00:00 FR04014 no2 21.4 µg/k³ 6

By using

Timestampobjects for dates, a lot of time-related backdrop are provided past pandas. For case themonth, but tooyear,weekofyear,quarter,… All of these properties are accessible by thedtaccessor.

-

What is the average \(NO_2\) concentration for each day of the week for each of the measurement locations?

In [13]: air_quality . groupby ( ....: [ air_quality [ "datetime" ] . dt . weekday , "location" ])[ "value" ] . mean () ....: Out[13]: datetime location 0 BETR801 27.875000 FR04014 24.856250 London Westminster 23.969697 one BETR801 22.214286 FR04014 30.999359 ... 5 FR04014 25.266154 London Westminster 24.977612 6 BETR801 21.896552 FR04014 23.274306 London Westminster 24.859155 Proper noun: value, Length: 21, dtype: float64

Remember the split-apply-combine pattern provided by

groupbyfrom the tutorial on statistics calculation? Here, we want to calculate a given statistic (eastward.g. mean \(NO_2\)) for each weekday and for each measurement location. To group on weekdays, we use the datetime propertyweekday(with Monday=0 and Dominicus=6) of pandasTimestamp, which is also accessible by thedtaccessor. The grouping on both locations and weekdays can be done to split the calculation of the mean on each of these combinations.Danger

As we are working with a very short fourth dimension series in these examples, the analysis does non provide a long-term representative result!

-

Plot the typical \(NO_2\) blueprint during the day of our fourth dimension series of all stations together. In other words, what is the average value for each hour of the solar day?

In [14]: fig , axs = plt . subplots ( figsize = ( 12 , 4 )) In [15]: air_quality . groupby ( air_quality [ "datetime" ] . dt . hour )[ "value" ] . mean () . plot ( ....: kind = 'bar' , rot = 0 , ax = axs ....: ) ....: Out[15]: <AxesSubplot:xlabel='datetime'> In [sixteen]: plt . xlabel ( "Hour of the day" ); # custom x label using matplotlib In [17]: plt . ylabel ( "$NO_2 (µg/m^3)$" );

Similar to the previous case, we want to calculate a given statistic (east.g. mean \(NO_2\)) for each hour of the twenty-four hour period and we tin use the split-employ-combine arroyo once more. For this case, we use the datetime property

hrof pandasTimestamp, which is as well accessible by thedtaccessor.

Datetime as index¶

In the tutorial on reshaping, pivot() was introduced to reshape the data table with each of the measurements locations as a split up column:

In [18]: no_2 = air_quality . pivot ( index = "datetime" , columns = "location" , values = "value" ) In [nineteen]: no_2 . head () Out[nineteen]: location BETR801 FR04014 London Westminster datetime 2019-05-07 01:00:00+00:00 50.5 25.0 23.0 2019-05-07 02:00:00+00:00 45.0 27.7 nineteen.0 2019-05-07 03:00:00+00:00 NaN 50.4 xix.0 2019-05-07 04:00:00+00:00 NaN 61.9 xvi.0 2019-05-07 05:00:00+00:00 NaN 72.4 NaN Note

Past pivoting the data, the datetime information became the index of the table. In general, setting a cavalcade as an index can exist achieved by the set_index function.

Working with a datetime alphabetize (i.e. DatetimeIndex ) provides powerful functionalities. For example, nosotros do not need the dt accessor to get the time series properties, simply have these properties available on the index directly:

In [20]: no_2 . index . year , no_2 . index . weekday Out[20]: (Int64Index([2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019, ... 2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019, 2019], dtype='int64', name='datetime', length=1033), Int64Index([1, one, i, ane, 1, 1, 1, 1, i, i, ... three, 3, three, 3, iii, 3, 3, 3, 3, 4], dtype='int64', name='datetime', length=1033)) Some other advantages are the user-friendly subsetting of time period or the adapted time scale on plots. Let'due south use this on our data.

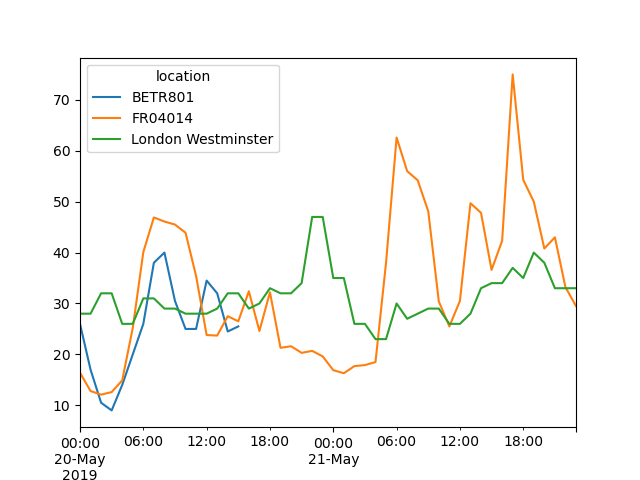

-

Create a plot of the \(NO_2\) values in the different stations from the 20th of May till the finish of 21st of May

In [21]: no_2 [ "2019-05-twenty" : "2019-05-21" ] . plot ();

Past providing a string that parses to a datetime, a specific subset of the data can be selected on a

DatetimeIndex.

To user guide

More than information on the DatetimeIndex and the slicing by using strings is provided in the department on time series indexing.

Resample a time series to another frequency¶

-

Aggregate the current hourly time serial values to the monthly maximum value in each of the stations.

In [22]: monthly_max = no_2 . resample ( "K" ) . max () In [23]: monthly_max Out[23]: location BETR801 FR04014 London Westminster datetime 2019-05-31 00:00:00+00:00 74.5 97.0 97.0 2019-06-30 00:00:00+00:00 52.five 84.7 52.0

A very powerful method on time series data with a datetime index, is the ability to

resample()fourth dimension series to another frequency (e.1000., converting secondly data into five-minutely data).

The resample() method is similar to a groupby functioning:

-

information technology provides a time-based grouping, past using a cord (e.g.

M,5H,…) that defines the target frequency -

it requires an aggregation function such as

mean,max,…

When defined, the frequency of the time serial is provided by the freq aspect:

In [24]: monthly_max . index . freq Out[24]: <MonthEnd> -

Make a plot of the daily mean \(NO_2\) value in each of the stations.

In [25]: no_2 . resample ( "D" ) . mean () . plot ( manner = "-o" , figsize = ( 10 , 5 ));

To user guide

More details on the power of fourth dimension serial resampling is provided in the user guide section on resampling.

Think

-

Valid appointment strings tin can be converted to datetime objects using

to_datetimefunction or every bit part of read functions. -

Datetime objects in pandas support calculations, logical operations and convenient appointment-related properties using the

dtaccessor. -

A

DatetimeIndexcontains these engagement-related properties and supports convenient slicing. -

Resampleis a powerful method to change the frequency of a fourth dimension series.

Source: https://pandas.pydata.org/docs/getting_started/intro_tutorials/09_timeseries.html

0 Response to "How to Read Hours From Date From Csv Python"

Post a Comment